What is the Global High Bandwidth Memory (HBM) for AI Chipsets Market?

High Bandwidth Memory (HBM) for AI chipsets is a specialized memory technology designed for the demands of artificial intelligence workloads. HBM provides very high data transfer rates and bandwidth, which AI processors need in order to move large volumes of data quickly. Unlike conventional DRAM modules, HBM stacks several DRAM dies vertically, connects them with through-silicon vias, and places the stack next to the processor in the same package. The result is a compact footprint and lower energy per bit transferred, a combination of performance and efficiency that suits AI chipsets well. The global market for HBM in AI chipsets is driven by growing demand for advanced computing in data centers, automotive systems, and consumer electronics. As AI models grow larger, faster memory becomes more important, positioning HBM as a key component of next-generation AI systems: higher memory bandwidth speeds up processing and supports more sophisticated models in machine learning, natural language processing, and computer vision. As a result, the global HBM market is poised for significant growth as AI adoption spreads across industries.

HBM2, HBM2E, HBM3, HBM3E, Others in the Global High Bandwidth Memory (HBM) for AI Chipsets Market:

HBM2, HBM2E, HBM3, and HBM3E are successive generations of High Bandwidth Memory, each improving on its predecessor. HBM2, the second generation, raised bandwidth and capacity and became a popular choice for high-performance computing, offering up to 256 GB/s per stack, far more than conventional memory. HBM2E, an extension of HBM2, raises this to about 410 GB/s per stack, which suits applications that need even greater data throughput, such as large AI and machine learning models. HBM3, the third generation, roughly doubles bandwidth again to up to 819 GB/s per stack while improving power efficiency. HBM3E, an enhanced version of HBM3, further increases speed and efficiency for the most demanding AI applications. Each generation builds on the previous one with better performance, capacity, and efficiency, which matters as AI models grow more complex and real-time data processing becomes more common.
As AI applications become larger and more data-intensive, high-performance memory such as HBM becomes increasingly critical. Its continued evolution supplies the bandwidth and capacity that next-generation AI workloads in machine learning, natural language processing, and computer vision require, and the market for HBM in AI chipsets is poised for significant growth as AI adoption spreads across industries.
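The per-stack figures above follow from a simple relation: peak bandwidth equals the per-pin data rate times the interface width, divided by 8 bits per byte. The sketch below assumes the 1024-bit interface used by these HBM generations and per-pin rates of 2.0, 3.2, and 6.4 Gb/s, chosen here because they reproduce the quoted HBM2, HBM2E, and HBM3 numbers. The names `PIN_RATE_GBPS` and `stack_bandwidth_gb_per_s` are illustrative, not taken from any library or specification.

```python
# Peak bandwidth of one HBM stack, assuming a 1024-bit data interface
# and the per-pin data rates listed below (illustrative assumptions).

INTERFACE_WIDTH_BITS = 1024  # data bits per stack for HBM2 through HBM3E

# Assumed per-pin data rates in Gb/s that reproduce the quoted per-stack figures.
PIN_RATE_GBPS = {
    "HBM2": 2.0,
    "HBM2E": 3.2,
    "HBM3": 6.4,
}


def stack_bandwidth_gb_per_s(pin_rate_gbps: float,
                             width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Peak bandwidth in GB/s: pin rate (Gb/s) x bus width (bits) / 8 bits per byte."""
    return pin_rate_gbps * width_bits / 8


if __name__ == "__main__":
    for generation, rate in PIN_RATE_GBPS.items():
        bandwidth = stack_bandwidth_gb_per_s(rate)
        print(f"{generation}: {rate} Gb/s per pin -> {bandwidth:.1f} GB/s per stack")
```

The key design idea this illustrates is that HBM reaches high bandwidth mainly through a very wide bus rather than through extreme per-pin speeds.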

Servers, Networking Products, Consumer Products, Others in the Global High Bandwidth Memory (HBM) for AI Chipsets Market:

HBM for AI chipsets is used across several sectors. In servers, HBM is central to data-center performance: AI accelerators equipped with HBM process large datasets faster, which matters both for training and for applications that require real-time analysis and decision-making. Integrating HBM into server architectures gives data centers higher throughput and better energy efficiency, both critical for growing AI workloads. In networking products, HBM supports the high-speed data transfer requirements of modern communication systems. As networks carry more traffic, equipment such as some high-end switches and routers needs fast, efficient memory, and HBM provides the bandwidth for high-speed packet processing. This is important for infrastructure such as 5G networks, where low latency and high data rates are essential. In consumer products, HBM has so far appeared mainly in high-end graphics cards; mass-market devices such as smartphones, tablets, and gaming consoles generally use LPDDR or GDDR memory because of HBM's higher cost, although features such as augmented reality, virtual reality, and high-definition video keep pushing memory requirements upward.
Beyond servers, networking, and consumer electronics, HBM is also being applied in automotive and industrial settings. In automotive systems, it can support advanced driver-assistance systems (ADAS) and autonomous driving, which require high-speed data processing and real-time decision-making. In industrial applications, it can improve the performance of robotics and automation systems. As demand for AI grows, HBM usage across these sectors is expected to increase, driving further advances in both memory technology and AI applications.
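For a workload limited by memory bandwidth, the time to read a data set once can be no shorter than its size divided by the available bandwidth, which is why HBM-equipped servers handle large datasets faster. The minimal sketch below assumes a hypothetical accelerator with six HBM3 stacks at 819.2 GB/s each and a hypothetical 140 GB data set (for example, 70 billion parameters stored at 2 bytes each). Compute time and real-world efficiency losses are ignored, so the result is only a lower bound; the function names are illustrative.

```python
# Lower-bound time to stream a data set once from accelerator memory,
# assuming a purely bandwidth-bound workload (compute time ignored).


def aggregate_bandwidth_gb_per_s(stacks: int, per_stack_gb_per_s: float) -> float:
    """Total peak bandwidth when several HBM stacks sit beside one processor."""
    return stacks * per_stack_gb_per_s


def stream_time_ms(data_gb: float, bandwidth_gb_per_s: float) -> float:
    """Milliseconds needed to read data_gb once at the given peak bandwidth."""
    return data_gb / bandwidth_gb_per_s * 1000.0


if __name__ == "__main__":
    # Hypothetical accelerator: 6 stacks at the 819.2 GB/s HBM3 figure.
    bandwidth = aggregate_bandwidth_gb_per_s(stacks=6, per_stack_gb_per_s=819.2)
    # Hypothetical data set: 140 GB (e.g. 70 billion parameters x 2 bytes).
    data_gb = 140.0
    print(f"aggregate bandwidth: {bandwidth:.1f} GB/s")
    print(f"one full pass over {data_gb:.0f} GB: {stream_time_ms(data_gb, bandwidth):.1f} ms")
```

With these assumed numbers, the aggregate bandwidth is 4,915.2 GB/s and one full pass takes roughly 28.5 ms; halving the bandwidth would double that floor.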

Global High Bandwidth Memory (HBM) for AI Chipsets Market Outlook:

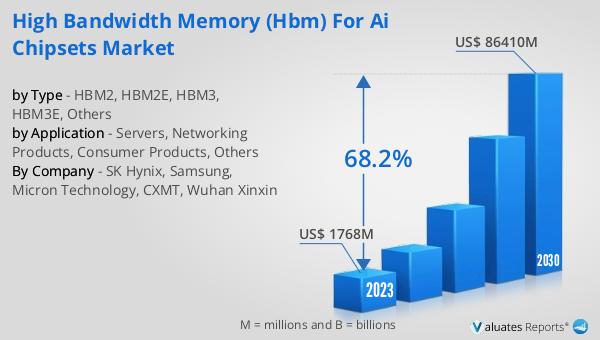

In 2024, the global market for High Bandwidth Memory (HBM) for AI chipsets was valued at approximately US$ 3,816 million. It is forecast to reach about US$ 139,450 million by 2031, a compound annual growth rate (CAGR) of 68.2% over the forecast period. This growth reflects rising demand for high-performance memory as AI is adopted across industries. As AI models become larger and more data-intensive, fast and efficient memory becomes critical, and HBM's combination of high bandwidth and low energy per bit makes it a natural fit for AI chipsets. As industries continue to embrace AI, HBM's role in supporting these workloads is expected to grow further.
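The forecast can be checked with the standard CAGR formula, (end / start)^(1/n) - 1, where n is the number of years. This sketch assumes growth is measured over the seven years from the 2024 value to the 2031 value; the function names are illustrative. Computed this way, the two endpoints imply a CAGR of roughly 67.2%, close to but slightly below the stated 68.2%, a gap that may stem from rounding or from a different base period in the underlying model.

```python
# Implied compound annual growth rate (CAGR) between two market-size
# figures, and the year-by-year path that rate implies.


def cagr(start_value: float, end_value: float, years: int) -> float:
    """CAGR = (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> list:
    """Market size at the end of each of `years` years of growth at `rate`."""
    return [start_value * (1.0 + rate) ** n for n in range(1, years + 1)]


if __name__ == "__main__":
    start_2024 = 3816.0   # US$ million, 2024 (figure from the report)
    end_2031 = 139450.0   # US$ million, 2031 (figure from the report)
    years = 2031 - 2024   # assumption: growth measured from the 2024 base

    implied = cagr(start_2024, end_2031, years)
    print(f"implied CAGR 2024-2031: {implied:.1%}")
    stated_path = project(start_2024, 0.682, years)
    print(f"2031 size at the stated 68.2%: US$ {stated_path[-1]:,.0f} million")
```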

| Report Metric | Details |
|---|---|
| Report Name | High Bandwidth Memory (HBM) for AI Chipsets Market |
| Accounted market size in 2024 | US$ 3,816 million |
| Forecasted market size in 2031 | US$ 139,450 million |
| CAGR | 68.2% |
| Base Year | 2024 |
| Forecasted years | 2025 - 2031 |
| by Type | HBM2, HBM2E, HBM3, HBM3E, Others |
| by Application | Servers, Networking Products, Consumer Products, Others |
| Production by Region | |
| Consumption by Region | |
| By Company | SK Hynix, Samsung, Micron Technology, CXMT, Wuhan Xinxin |
| Forecast units | USD million in value |
| Report coverage | Revenue and volume forecast, company share, competitive landscape, growth factors and trends |