What is the Global HBM Chips for AI Servers Market?

The Global HBM Chips for AI Servers Market covers High Bandwidth Memory (HBM) chips used in artificial intelligence (AI) servers. HBM is a memory technology that combines high data-transfer rates with low power consumption, which suits AI workloads that move very large volumes of data. HBM stacks memory dies vertically to increase bandwidth and reduce latency, which matters for AI servers that must handle complex computations and large datasets efficiently. Demand is driven by the growing adoption of advanced AI solutions in industries such as healthcare, finance, and automotive. As AI technology advances, the need for more powerful and efficient memory such as HBM is expected to grow, making this market a focus for technology companies and investors alike.

HBM2, HBM2E, HBM3, HBM3E, Others in the Global HBM Chips for AI Servers Market:

HBM2, HBM2E, HBM3, and HBM3E are successive generations of High Bandwidth Memory (HBM) technology, each improving on performance, capacity, and efficiency. HBM2, the second generation, provides higher bandwidth and lower power consumption than first-generation HBM and is widely used in AI servers because it moves large amounts of data quickly and efficiently. HBM2E, an enhanced version of HBM2, raises bandwidth and capacity further, making it suitable for more demanding AI applications. HBM3, the third generation, brings significant gains in speed, capacity, and power efficiency, with faster data transfer rates and higher memory capacity per stack. HBM3E, an enhanced version of HBM3, improves performance and efficiency again and targets the most demanding AI applications. The "Others" category includes newer generations, such as HBM4, that are being developed for future AI servers. These generational advances allow AI servers to handle increasingly complex computations and larger datasets, and the resulting demand for HBM2, HBM2E, HBM3, and HBM3E is expected to drive the growth of the Global HBM Chips for AI Servers Market.
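To make the generational differences concrete, peak bandwidth per HBM stack can be estimated as interface width times per-pin data rate. The sketch below is illustrative only: the 1024-bit interface width and the per-pin rates are approximate, commonly cited headline figures rather than numbers from this report, actual products vary by vendor and speed grade, and the names `PIN_RATE_GBPS` and `peak_bandwidth_gb_s` are hypothetical.

```python
# Rough per-stack peak bandwidth estimate for each HBM generation.
# ASSUMPTION: 1024-bit interface per stack (typical for HBM2 through HBM3E).
BUS_WIDTH_BITS = 1024

# ASSUMPTION: approximate headline per-pin data rates in Gbit/s.
# These are illustrative values, not figures taken from the report.
PIN_RATE_GBPS = {
    "HBM2": 2.0,
    "HBM2E": 3.6,
    "HBM3": 6.4,
    "HBM3E": 9.6,
}


def peak_bandwidth_gb_s(pin_rate_gbps: float, bus_width_bits: int = BUS_WIDTH_BITS) -> float:
    """Return peak bandwidth in GB/s: (pins * Gbit/s per pin) / 8 bits per byte."""
    return pin_rate_gbps * bus_width_bits / 8


for generation, rate in PIN_RATE_GBPS.items():
    print(f"{generation}: ~{peak_bandwidth_gb_s(rate):.0f} GB/s per stack")
```

Because an AI accelerator usually carries several stacks, total memory bandwidth scales with the stack count as well as with the generation.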

CPU+GPU Servers, CPU+FPGA Servers, CPU+ASIC Servers, Others in the Global HBM Chips for AI Servers Market:

HBM chips are used in several types of AI servers, including CPU+GPU servers, CPU+FPGA servers, and CPU+ASIC servers. CPU+GPU servers combine the processing power of central processing units (CPUs) and graphics processing units (GPUs) to handle complex AI computations. In these servers, HBM provides high-speed data transfer and low latency, so large datasets can be processed quickly and efficiently. CPU+FPGA servers pair CPUs with field-programmable gate arrays (FPGAs), customizable hardware components that can be programmed to perform specific tasks. Here HBM supplies the high bandwidth and low power consumption needed for efficient AI processing. CPU+ASIC servers pair CPUs with application-specific integrated circuits (ASICs), specialized hardware components designed for a particular task, and rely on HBM for the same high-speed, low-latency data transfer. Other server types, such as those deployed in data centers and cloud computing, also use HBM chips for their high bandwidth and low power consumption. Across all of these configurations, HBM enables servers to handle increasingly complex AI computations and larger datasets, driving the growth of the Global HBM Chips for AI Servers Market.

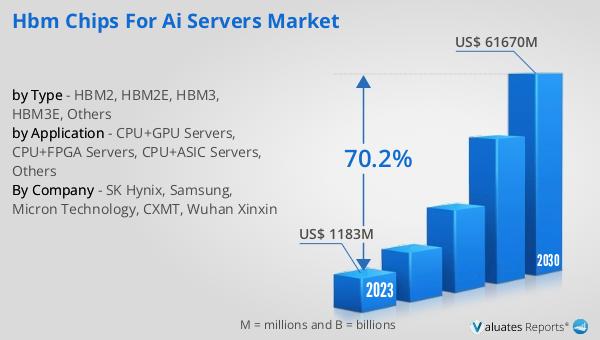

Global HBM Chips for AI Servers Market Outlook:

The global HBM Chips for AI Servers market was valued at US$ 1,183 million in 2023 and is anticipated to reach US$ 61,670 million by 2030, a CAGR of 70.2% over the 2024-2030 forecast period. This growth is driven by increasing demand for advanced AI solutions across industries such as healthcare, finance, and automotive. As AI technology evolves, the need for more powerful and efficient memory such as HBM is expected to grow, making this market a key area of focus for technology companies and investors alike. Advances across HBM2, HBM2E, HBM3, and HBM3E underpin more powerful and efficient AI servers that can handle increasingly complex computations and larger datasets, and the use of HBM in CPU+GPU, CPU+FPGA, and CPU+ASIC servers is driving market growth. As demand for advanced AI solutions continues to rise, the market for HBM chips in AI servers is expected to expand significantly, creating opportunities for technology companies and investors.
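As a sanity check on the figures above, the compound annual growth rate can be recomputed from the stated values. The sketch below is a rough check, not the report's methodology: the helper names `cagr` and `implied_start_value` are hypothetical, and the report does not state a 2024 market size, so the 2024 figure here is only back-calculated under the assumption that the 70.2% rate compounds over the six years from 2024 to 2030.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate as a fraction (0.70 means 70%)."""
    return (end_value / start_value) ** (1 / periods) - 1


def implied_start_value(end_value: float, rate: float, periods: int) -> float:
    """Starting value that grows to end_value at the given rate over periods."""
    return end_value / (1 + rate) ** periods


value_2023 = 1183.0    # US$ million, from the report
value_2030 = 61670.0   # US$ million, from the report
stated_cagr = 0.702    # 70.2%, stated for the 2024-2030 forecast period

# Growth rate if measured from the 2023 base year (seven annual steps).
rate_from_2023 = cagr(value_2023, value_2030, periods=7)

# ASSUMPTION: the stated CAGR compounds from a 2024 base (six annual steps).
implied_2024 = implied_start_value(value_2030, stated_cagr, periods=6)

print(f"CAGR measured from 2023: {rate_from_2023:.1%}")
print(f"Implied 2024 market size: US$ {implied_2024:,.0f} million")
```

Measured from the 2023 base, the rate comes out at roughly 76%, somewhat above the stated 70.2%. The two are consistent only if the 2024 market size is about US$ 2.5 billion, which is an inference from the arithmetic rather than a number given in the report.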

| Report Metric | Details |
| --- | --- |
| Report Name | HBM Chips for AI Servers Market |
| Accounted market size in 2023 | US$ 1,183 million |
| Forecasted market size in 2030 | US$ 61,670 million |
| CAGR | 70.2% |
| Base Year | 2023 |
| Forecasted years | 2024 - 2030 |
| By Type | HBM2, HBM2E, HBM3, HBM3E, Others |
| By Application | CPU+GPU Servers, CPU+FPGA Servers, CPU+ASIC Servers, Others |
| Production by Region | |
| Consumption by Region | |
| By Company | SK Hynix, Samsung, Micron Technology, CXMT, Wuhan Xinxin |
| Forecast units | USD million in value |
| Report coverage | Revenue and volume forecast, company share, competitive landscape, growth factors and trends |